Four Imperatives for Cybersecurity Success in the Digital Age: Part 2

Having joined Palo Alto Networks following a 35-year career in the U.S. military, the past decade of which I served in a variety of leadership positions in cyber operations, strategy and policy, I have found that many of the cybersecurity challenges we face from a national security perspective are the same in the broader international business world.

This blog post series describes what I consider to be four major imperatives for cybersecurity success in the digital age, regardless of whether your organization is a part of the public or private sector.

In my first blog I covered Imperative #1. Here are the major themes for each imperative:

- Imperative #1 – We must flip the scales (blogged on February 16, 2016)

- Imperative #2 – We must broaden our focus to sharpen our actions

- Imperative #3 – We must change our approach

- Imperative #4 – We must work together

Blog #2 of 4: Imperative #2

WE MUST BROADEN OUR FOCUS TO SHARPEN OUR ACTIONS

Before I get to the details, allow me to review some background and context, and then provide an executive summary of Imperative #2.

Background and Context

As a reminder from my previous blog, I use the four factors in Figure 1 to explain the concept behind Imperative #2 in a comprehensive way.

Figure 1

- Threat: This factor describes how the cyber threat is evolving and how we are responding to those changes

- Policy and Strategy: Given our assessment of the overall environment, this factor describes what we should be doing and our strategy to align means (resources and capabilities – or the what) and ways (methods, priorities and concepts of operations – or the how) to achieve ends (goals and objectives – or the why)

- Structure: This factor includes both organizational (human dimension) and architectural (technical dimension) aspects

- Tactics, Techniques and Procedures (TTP): This factor represents the tactical aspects of how we actually implement change where the rubber meets the road

In this second blog of the series I’d like to take you through Imperative #2 using the concept model outlined above, and step through the implications.

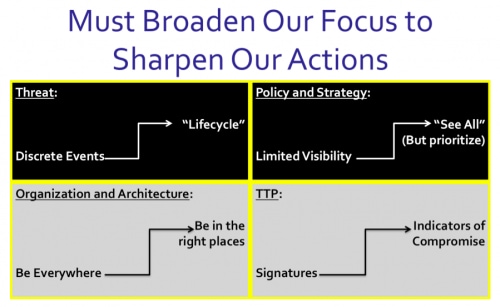

Figure 2

EXECUTIVE SUMMARY

We need to change the way we look at today’s cyber Threat because there’s a smarter approach, one that allows us in the cybersecurity community to broaden our focus and “see the whole forest instead of getting lost in the trees.” Instead of looking at the problem as an ever-increasing volume of discrete events, we must leverage the step-by-step process that almost all cyber actors use to accomplish their intentions. This process is known as the Threat “lifecycle,” and by using the lifecycle as a lens to broaden our focus, we can sharpen our actions by dealing more effectively with a limited number of cyber Threat playbooks instead of an endless number of individual cyber events.

Doing so requires that we achieve full visibility over what’s happening in our network enterprise environment on a continuous basis and in near real time. That increase in visibility can produce a lot more information for Defenders to deal with, so they have to prioritize: identify their most vital functions, map where those functions reside in their enterprise network architecture, and focus on the key portions of that architecture that are both vulnerable and subject to an assessed threat (cyber or otherwise). Defenders must also apply technology and automation to discover the threats using a lifecycle approach (looking at Threat playbooks instead of millions of discrete events), and reserve their people for active business action in a sustainable way.

By broadening our focus to the Threat lifecycle and gaining greater visibility over what’s happening in our network environment, we can sharpen our actions by placing our security architecture where it matters, instead of following the legacy view that it has to be everywhere in the hopes of stopping a breach. Smart security architecture placement is not only more effective against a more holistic (and manageable) view of the Threat, but is also a significantly more efficient way to apply technology, save money, and employ human expertise where it is most required.

We must also sharpen our actions by evolving from legacy, signature-based thinking toward more effective TTP that focus on indicators of compromise. There is simply no way to keep up with the exponential growth in both the number of vulnerabilities in our network environment and the number of Threat signatures. The Internet of Things will only exacerbate the problem by increasing the overall attack surface. However, by tracking a relatively small number of defined techniques across the cyber threat lifecycle categories (broadening our focus), we can sharpen our actions: prioritize important events, put understandable context around them, and then rapidly make these indicators consumable so that we can automate the adjustment of our cybersecurity posture.

DETAILED DESCRIPTION OF IMPERATIVE #2

THREAT: We have traditionally viewed cyber attacks, breaches and other threat activity as discrete events. When I was working at United States Cyber Command as the Director of Current Operations, I used to tell my leadership that we were experiencing literally millions of events (mostly probes, but many times other, more serious, events) per day. Millions!

We set up a system to triage the most serious events, assigned teams to chase them down, logged the status of each event, followed through with isolation and remediation, and then processed all this through an incident management tracking system that, over time, just ended up looking like a mountain of exponentially growing, endless work needing more and more people to keep up with.

This type of approach was only putting us further and further behind, and it began to distract our workforce from what was really important. This gets back to the “math problem” that I covered in my first blog on Imperative #1. It’s impossible to get ahead of the problem if you address the Threat using that kind of model.

What’s changed over the past few years is that we’ve collectively figured out a way to work smarter rather than harder. Adversaries, like those of us in the national security cyber community, use a common process: a set series of steps, or a lifecycle, that just about any cyber adversary must step through to be successful.

The lifecycle involves information gathering and reconnaissance or probing; then gaining an initial foothold in a network; then the initial compromise and deployment of an exploit or other tool; then establishing control over that access; then privileged movement through the network to reach the place where the adversary can accomplish what they came to do; and finally, the exfiltration of information or other more disruptive, deceptive or destructive results.

In most cases this takes time (at least hours, if not days/weeks/months), and when you view the adversary activity in this manner, you can see that if you put in place mechanisms to monitor your network enterprise for these various stages of the lifecycle it can be possible to watch an adversary walk through it … and on top of that, the end result is that you realize that instead of dealing with an avalanche of millions of discrete events, you are now talking about a reasonably manageable number of Threat playbooks … some estimates are that there are only thousands of these playbooks. As our company CSO Rick Howard likes to say, “You can put that on a spreadsheet!”
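To make the “spreadsheet” point concrete, here is a minimal Python sketch of how a flood of discrete events collapses into a much smaller set of lifecycle progressions. It assumes each event has already been tagged with a source (“actor”) and a lifecycle stage, which in practice is the hard part. The stage labels, `Stage`, `playbooks_from_events` and `furthest_stage` are hypothetical illustrations, not any product’s API.

```python
from collections import defaultdict
from enum import IntEnum


class Stage(IntEnum):
    """Lifecycle stages in the order described above (labels are illustrative)."""
    RECON = 1      # information gathering, reconnaissance, probing
    FOOTHOLD = 2   # initial foothold in the network
    EXPLOIT = 3    # initial compromise, exploit or tool deployed
    CONTROL = 4    # control of the access established
    MOVEMENT = 5   # privileged movement toward the objective
    ACTION = 6     # exfiltration or disruptive/deceptive/destructive effects


def playbooks_from_events(events):
    """Collapse (actor, stage) events into one ordered list of distinct stages per actor."""
    seen = defaultdict(set)
    for actor, stage in events:
        seen[actor].add(stage)
    return {actor: sorted(stages) for actor, stages in seen.items()}


def furthest_stage(playbooks):
    """Most advanced lifecycle stage each actor has reached so far."""
    return {actor: max(stages) for actor, stages in playbooks.items()}


if __name__ == "__main__":
    # 1,000 repetitive probes plus a few later-stage events.
    events = [("actor-a", Stage.RECON)] * 1000 + [
        ("actor-a", Stage.FOOTHOLD),
        ("actor-b", Stage.RECON),
        ("actor-a", Stage.CONTROL),
    ]
    playbooks = playbooks_from_events(events)
    print(len(events), "events ->", len(playbooks), "actor playbooks")
    print(furthest_stage(playbooks)["actor-a"].name)  # CONTROL
```

The point of the design is that a Defender watches how far each actor has walked through the lifecycle rather than triaging each of the 1,003 events individually.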

STRATEGY: From a strategy perspective, this requires that we look at the entire lifecycle, and not just at bits and pieces. I list the term “limited visibility” in Figure 2, but in many cases it’s more accurately defined as no visibility into what’s happening within an organization’s network.

When an organization’s IT staff does have visibility, it’s usually the result of being informed by an external entity (like the FBI or some other law enforcement or domestic security agency) that something’s amiss. That forces the staff to go find out what happened (past tense) and dig into the forensics after the fact. Nobody in the cybersecurity community wants to spend their life cleaning up the mess in aisle 9!

We need to shift the dynamic from limited or no visibility over what’s happening within our networks to seeing everything that’s happening on our networks in near real time. Some in the U.S. government, such as the Department of Homeland Security, call this Continuous Diagnostics and Mitigation, or CDM.

But this raises an interesting point about making sense of all this new, near-real-time data. It can be overwhelming unless you have a way to prioritize what’s most important. How do you do that? How do you manage it without having to hire more and more people as your alerts go through the roof?

One way that I’ve seen work is to first look at the most critical functions of the organization. In the military, we called these mission critical functions – functions without which the organization would fail to achieve its mission. It would be the same for any business. Then, you have to translate those mission critical functions into where they reside on the network, and which segments of the network, systems and endpoint devices are then “mission critical” (or “cyber key terrain” as it is known in the military).

These key points within the organization’s architecture would then be assessed against two other factors (mission criticality being the first factor) … are there vulnerabilities (both cyber and non-cyber), and what is the threat (both cyber and non-cyber)?

It is at the intersection of all three factors … 1) critical to the mission, 2) vulnerable and 3) there’s an assessed threat (either general or specific) to them … that the organization should then focus its ability to continuously monitor for full visibility. Is your network security provider providing you with the capabilities to prioritize the mountains of data to make it relevant to your needs? You should be asking.
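The three-factor intersection can be expressed as a simple filter over an asset inventory. This is a minimal Python sketch assuming each segment, system or endpoint has already been assessed as mission critical, vulnerable and threatened (true/false); the `Asset` record, `monitoring_focus` and the asset names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Asset:
    """A network segment, system or endpoint scored on the three factors (hypothetical model)."""
    name: str
    mission_critical: bool  # supports a mission-critical function ("cyber key terrain")
    vulnerable: bool        # has cyber or non-cyber vulnerabilities
    threatened: bool        # faces an assessed (general or specific) threat


def monitoring_focus(assets):
    """Return the names of assets at the intersection of all three factors."""
    return [a.name for a in assets if a.mission_critical and a.vulnerable and a.threatened]


if __name__ == "__main__":
    inventory = [
        Asset("payments-db", mission_critical=True, vulnerable=True, threatened=True),
        Asset("hr-portal", mission_critical=True, vulnerable=False, threatened=True),
        Asset("guest-wifi", mission_critical=False, vulnerable=True, threatened=True),
    ]
    print(monitoring_focus(inventory))  # ['payments-db']
```

Real assessments are rarely this binary; a weighted score per factor would be a natural refinement, but the intersection rule is what the text describes.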

Another way to use greater visibility over what’s happening on your networks without adding to the complexity of your environment or adding tons of people (actually, what I’m about to suggest here will REDUCE the need for people, not add to it) is to apply technology and automation to discover the threats using a lifecycle approach (looking at the whole threat picture instead of millions of discrete events), and save the people you hire to use their skills to take active business action in a sustainable way.

Instead of detecting suspicious parts of a possible attack in isolation, you consider ALL of its characteristics and automatically detect one of the playbooks that I mentioned in the initial discussion about the Threat. Even if your attacker changes one characteristic, you can still recognize the others, for example, the command and control protocols. So even though one part of the attack has changed, you can block the whole attack: this particular Threat playbook is detected and blocked as a whole. Now, what if your automation were so extensive that it learned in real time from the hundreds of thousands of attacks that happen around the world every day?
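One simple way to picture “recognize the playbook even if one characteristic changed” is to score how many of a known playbook’s characteristics appear in the observed activity. The Python sketch below uses a plain overlap fraction with an assumed 75% threshold; this is an illustrative stand-in, not how any particular product matches playbooks, and the characteristic labels and names (`Playbook`, `match_playbook`) are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Playbook:
    """A known Threat playbook described by a set of characteristics (hypothetical labels)."""
    name: str
    characteristics: frozenset


def match_playbook(observed, playbooks, min_overlap=0.75):
    """Return the playbook whose characteristics best overlap the observed set,
    or None if no playbook reaches the min_overlap fraction."""
    best, best_score = None, 0.0
    for pb in playbooks:
        if not pb.characteristics:
            continue  # an empty playbook cannot be matched meaningfully
        score = len(observed & pb.characteristics) / len(pb.characteristics)
        if score > best_score:
            best, best_score = pb, score
    return best if best_score >= min_overlap else None


if __name__ == "__main__":
    known = [Playbook("example-playbook",
                      frozenset({"spearphish-doc", "macro-dropper", "dns-c2", "smb-lateral"}))]
    # The attacker swapped the dropper, but the other three characteristics still match.
    observed = {"spearphish-doc", "new-dropper", "dns-c2", "smb-lateral"}
    match = match_playbook(observed, known)
    print(match.name if match else "no match")  # example-playbook
```

Once the playbook is identified, the response can target every characteristic it contains, not only the one piece that tripped an alert.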

I’ll describe more about how this can be done using automation and an integrated platform approach in my next blog about Imperative #3.

ARCHITECTURE: In my last blog I focused on the human, organizational structure implications of the imperative for change, describing the need for organizations to move the decision making forum from the “server room into the boardroom and C-Suite.” This time I’m going to focus on the architectural structure implications of the imperative to move from being everywhere in the network, to being in the right architectural places to be effective.

Traditionally, many organizations (including several in the military of which I was a part) thought that to provide an effective defense you had to be literally everywhere, and we liked to call this concept a “layered defense.”

We learned the “M&M lesson” long ago about the insufficiency of having a strong outer shell with a soft and gooey middle … which meant that once inside, a cyber adversary pretty much had the run of the place.

But in our best attempts to be strong everywhere by putting point solutions all over the layers of our networks and hoping that one would catch something, we found that we were strong nowhere … and worse yet, we created so much complexity by bolting on and jury rigging multiple point solutions that weren’t natively designed to communicate with each other that we actually made the situation worse.

Smart architecture is about precision placement of the right network and endpoint device security in the architecturally relevant places based on the cyber threat life cycle. Instead of being everywhere and strong nowhere, it’s smarter to be in the right places and strong where it matters.
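“Be in the right places” can be framed as a coverage problem: which control points, placed where, cover every lifecycle stage on the key terrain? The Python sketch below uses a greedy set-cover heuristic as an illustration. That technique is my choice for the example, not a method from this post, and it gives a reasonable rather than guaranteed-minimal placement. The segment names, stage labels and control-point names are hypothetical.

```python
def place_controls(candidates, required):
    """Greedy set cover: choose control points until every required
    (segment, lifecycle stage) pair is covered, or no candidate helps.

    candidates: dict of control-point name -> set of (segment, stage) pairs
    it can observe or block. Returns (chosen names, pairs left uncovered).
    """
    uncovered = set(required)
    chosen = []
    while uncovered and candidates:
        # Pick the control point that covers the most still-uncovered pairs.
        name, covers = max(candidates.items(), key=lambda kv: len(kv[1] & uncovered))
        gained = covers & uncovered
        if not gained:
            break  # remaining pairs cannot be covered by any candidate
        chosen.append(name)
        uncovered -= gained
    return chosen, uncovered


if __name__ == "__main__":
    required = {("payments", "exploit"), ("payments", "control"), ("payments", "exfil")}
    candidates = {
        "fw-payments-edge": {("payments", "control"), ("payments", "exfil")},
        "endpoint-payments": {("payments", "exploit"), ("payments", "control")},
        "fw-guest-wifi": {("guest-wifi", "recon")},
    }
    chosen, gaps = place_controls(candidates, required)
    print(chosen, gaps)  # ['fw-payments-edge', 'endpoint-payments'] set()
```

Note that the guest Wi-Fi control is never selected, because it does not protect the key terrain; any uncovered pairs returned are visibility gaps worth flagging.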

If we apply the “broadening our focus” aspects I just described for the threat lifecycle and greater visibility, then we can “sharpen our actions” by adjusting our security architecture to be where it matters.

Moreover, this kind of approach is not only more effective; it’s significantly more efficient because you can save resources in terms of both technology and the humans to run it. In fact, I should have put a dollar sign on the left and a cents sign on the right of that bottom left quadrant in Figure 3!

TTP: Finally, as we look at the TTP side of Imperative #2 we see something very, very powerful happening.

If you look at the number of vulnerabilities in our networks, systems, apps, etc., over time you can see it’s an exponential growth. It’s the same with malicious software signatures for anti-virus and anti-spyware, etc. Over time it is clearly exponential as well. How do you possibly keep up with that?

According to Cisco, by 2019 there will be 25 billion devices connected to the Internet. There are 16 billion today. That’s roughly a 56% increase in your attack surface to protect.

However, there is some good news. If you look at the process or basic techniques that cyber adversaries use to do what they do, there is only a finite number of these techniques (fewer than three dozen, we believe).

Everybody uses them … and I can tell you from my previous life in the military, even we used these same techniques for national security purposes.

The exciting news for the cybersecurity community is that by tracking these small, defined techniques across the cyber threat life cycle categories (or broadening our focus) we can sharpen our actions to prioritize important events, put understandable context around the indicators of compromise (like specific adversary groups or people, related indicators and targets of their activities), and then rapidly make these indicators consumable to adjust our cybersecurity posture.
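Here is a minimal Python sketch of the idea that many indicators roll up under a few techniques, and that indicators become “consumable” once they carry context and are exported in a form a control can use. The `Indicator` fields, technique and group labels, and the plain blocklist output are assumptions for illustration; real deployments would typically exchange indicators in a structured format such as STIX rather than a bare list.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Indicator:
    """An indicator of compromise plus the context that makes it actionable."""
    value: str      # e.g. a domain name or file hash
    kind: str       # e.g. "domain" or "sha256"
    technique: str  # adversary technique this indicator is evidence of (hypothetical label)
    stage: str      # lifecycle category of that technique
    group: str      # adversary group associated with the activity (hypothetical)


def by_technique(indicators):
    """Group many indicators under the small set of techniques they evidence."""
    grouped = defaultdict(list)
    for ioc in indicators:
        grouped[ioc.technique].append(ioc)
    return dict(grouped)


def blocklist(indicators, kind):
    """Export indicators of one kind as a sorted, de-duplicated list a control can consume."""
    return sorted({ioc.value for ioc in indicators if ioc.kind == kind})


if __name__ == "__main__":
    iocs = [
        Indicator("a.example", "domain", "dns-c2", "control", "group-x"),
        Indicator("b.example", "domain", "dns-c2", "control", "group-x"),
        Indicator("c.example", "domain", "dns-c2", "control", "group-x"),
        Indicator("a.example", "domain", "dns-c2", "control", "group-x"),  # duplicate sighting
        Indicator("0f" * 32, "sha256", "macro-dropper", "exploit", "group-x"),
    ]
    print(sorted(by_technique(iocs)))   # ['dns-c2', 'macro-dropper']
    print(blocklist(iocs, "domain"))    # ['a.example', 'b.example', 'c.example']
```

Five sightings reduce to two techniques to reason about, and the domain list can be pushed to a blocking control automatically.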

This really excites me, because for the first time it offers the chance to see some daylight, and it helps to rebalance the Attacker/Defender scale that I covered in Imperative #1. Dealing with three dozen things is something we can keep up with, rather than trying to sort out and deal with millions of things daily.

The faster organizations consider a model that isn’t dependent on hiring more and more people, the sooner they will have a defense model they can sustain in our changing world.

This would mean organizations wouldn’t have to keep on hiring more and more people. It would mean the people they do have could use their skills to take active business action. They would be able to keep their business secure and keep their best people engaged and employed in a model that is sustainable.

CONCLUSION

We’ve learned a smarter approach to deal effectively with a limited number of Cyber Threat playbooks instead of an endless number of discrete cyber events.

By achieving full visibility over what’s happening in our network enterprise environment on a continuous basis, we can apply technology and automation to discover the threats using a lifecycle approach and free our people to take active business action in a sustainable way. But we should prioritize that approach using the intersection of three factors: business/mission criticality, vulnerabilities, and threats.

We can sharpen our actions by adjusting our security architecture to be where it matters most instead of a legacy view that it has to be everywhere in the hopes of stopping a breach. Smart security architecture placement is not only more effective. It’s much more efficient.

Finally, we should evolve from a signature-based approach toward more effective TTP that focus on indicators of compromise, so that we can sharpen our actions to prioritize important events, put understandable context around them, and then automate the adjustment of our cybersecurity posture.

In my next blog of this series I’ll be discussing Imperative #3 – We Must Change our Approach.

Written by John A. Davis, Major General (Retired) United States Army, and Vice President and Federal Chief Security Officer (CSO) for Palo Alto Networks
